Do AI Benchmarks Still Matter? The Evidence for and Against Public Leaderboards

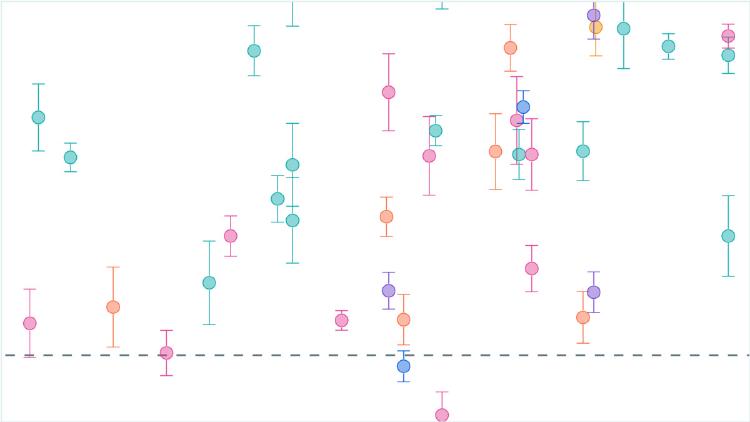

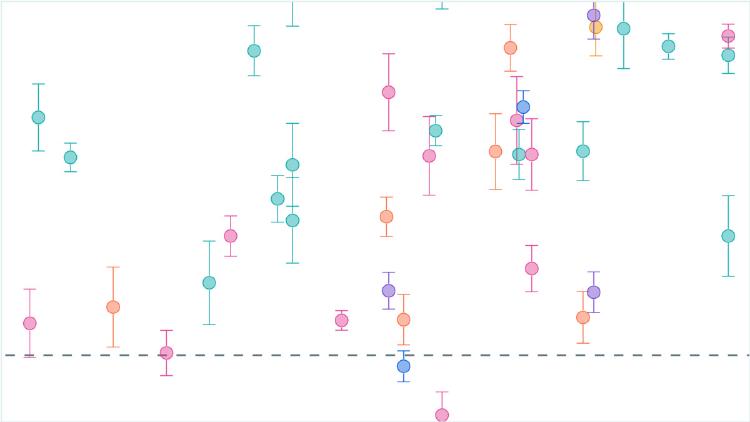

A data-driven look at benchmark contamination, leaderboard gaming, and whether public AI benchmarks can still tell us anything useful about model capabilities.

Rankings of the best open-source LLMs you can run on home hardware (RTX 4090, RTX 3090, Apple M3/M4 Max), organized by VRAM tier with real-world tokens-per-second benchmarks and quality scores.

Rankings of the best AI models for long-context tasks, measuring retrieval accuracy, reasoning, and comprehension across massive context windows from 128K to 10M tokens.

Anthropic's new mid-tier model matches Opus 4.6 on coding benchmarks, ships a million-token context window, and keeps the same $3/$15 pricing as its predecessor.

Comprehensive ranking of the top large language models in February 2026, combining benchmarks for reasoning, coding, knowledge, and multimodal capabilities.

A plain-English guide to AI benchmarks like MMLU, GPQA, SWE-Bench, and Chatbot Arena Elo, explaining what they measure and why no single score tells the whole story.