Claude Sonnet 4.6 Review: The Workhorse That Ate the Flagship

Anthropic's mid-tier model delivers 98% of Opus performance at one-fifth the cost, with a 1M token context window and near-parity on coding and computer use benchmarks.

Anthropic has a cannibalization problem, and it is entirely self-inflicted. Just twelve days after launching Claude Opus 4.6 - a model I scored 9.3/10 and called one of the most capable AI systems available - the company released Claude Sonnet 4.6, a mid-tier model that matches its flagship on nearly every metric that matters to working developers. The price? One-fifth of Opus. If you are building with Claude today, this is almost certainly the model you should be using.

What Sonnet 4.6 Actually Is

Sonnet 4.6 is the latest entry in Anthropic's mid-range tier, sitting below the premium Opus line and above the lightweight Haiku models. It launched on February 17, 2026, and is available across all Claude plans (including the free tier on claude.ai), Claude Code, the Anthropic API, and major cloud platforms including AWS Bedrock, Google Cloud Vertex AI, and Microsoft Azure Foundry.

The pricing is unchanged from Sonnet 4.5: $3 per million input tokens and $15 per million output tokens. That is one-fifth the price of Opus 4.6 ($15/$75) and roughly half the cost of OpenAI's GPT-5.3 Codex ($6/$30). Prompt caching cuts the cost of cached input tokens by up to 90%, and batch processing takes another 50% off.
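
To see how those discounts stack, here is a small cost estimator. It is a sketch built only on the list prices above, and it assumes that cached input tokens are billed at 10% of the base input rate (the source of the "up to 90%" figure) and that batch jobs are billed at half price. It ignores any cache-write surcharge, so treat the output as a rough estimate rather than an invoice.

```python
# Sketch: estimate the USD cost of one Sonnet 4.6 request from its token counts.
# Assumptions: cache reads cost 10% of the base input price, batch jobs cost 50%
# of list price, and cache-write surcharges are ignored.

INPUT_PER_MTOK = 3.00    # USD per million input tokens (list price quoted above)
OUTPUT_PER_MTOK = 15.00  # USD per million output tokens

def request_cost(input_tokens: int, output_tokens: int,
                 cached_input_tokens: int = 0, batch: bool = False) -> float:
    """Return the estimated cost in USD of a single request."""
    if cached_input_tokens > input_tokens:
        raise ValueError("cached tokens cannot exceed total input tokens")
    uncached = input_tokens - cached_input_tokens
    cost = (uncached * INPUT_PER_MTOK
            + cached_input_tokens * INPUT_PER_MTOK * 0.10  # discounted cache reads
            + output_tokens * OUTPUT_PER_MTOK) / 1_000_000
    return cost * 0.5 if batch else cost  # batch discount applies to the whole bill
```

For example, `request_cost(100_000, 5_000)` comes to $0.375, while the same request with 90,000 of its input tokens served from cache drops to about $0.132.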

The headline feature is a 1 million token context window (currently in beta), large enough to hold full codebases, long contracts, or dozens of research papers in a single prompt. The default remains 200K tokens; the extended window is enabled per request via an API beta flag.
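
Because the extended window is opt-in per request, the only change on the client side is an extra header. The sketch below builds (but does not send) a raw Messages API request using only the Python standard library. The model ID `claude-sonnet-4-6` and the beta flag value are assumptions, the latter borrowed from the long-context beta flag Anthropic has documented previously; verify both against Anthropic's current documentation before relying on them.

```python
import json

# Hypothetical helper: builds a Messages API request that opts into the
# 1M-token context window. MODEL_ID and LONG_CONTEXT_BETA are assumed values.
API_URL = "https://api.anthropic.com/v1/messages"
MODEL_ID = "claude-sonnet-4-6"               # assumed model identifier
LONG_CONTEXT_BETA = "context-1m-2025-08-07"  # assumed beta flag value

def build_long_context_request(api_key: str, prompt: str, max_tokens: int = 4096):
    """Return (url, headers, json_body) for a long-context request."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "anthropic-beta": LONG_CONTEXT_BETA,  # omit this and the 200K default applies
        "content-type": "application/json",
    }
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return API_URL, headers, json.dumps(body)
```

The returned tuple can be passed to any HTTP client; keeping the flag in one constant makes it easy to update if the beta identifier changes.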

The Benchmarks Tell a Story

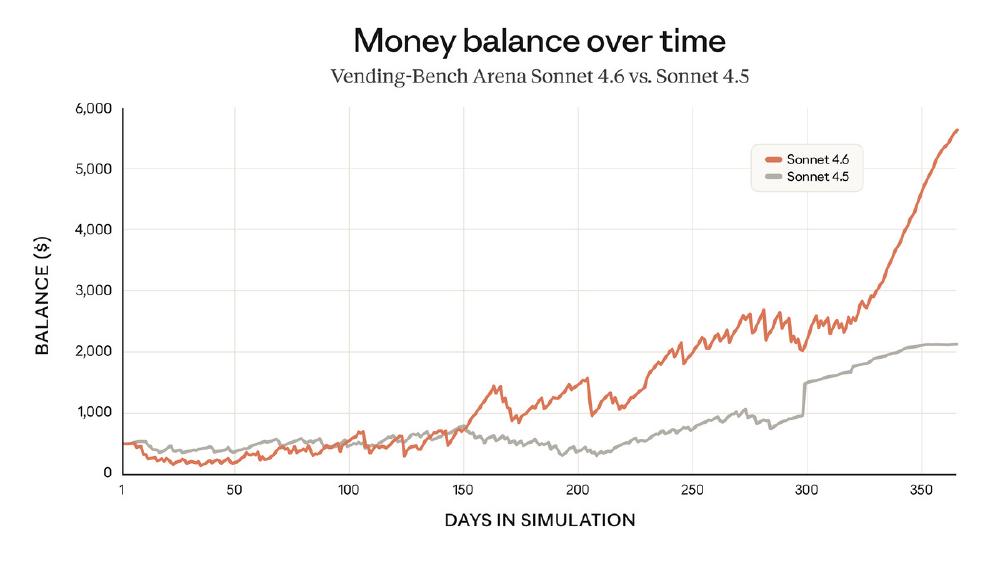

The numbers are the reason this review exists. Sonnet 4.6 does not just close the gap with Opus - it practically eliminates it on the benchmarks developers care about most.

| Benchmark | Sonnet 4.6 | Sonnet 4.5 | Opus 4.6 |
|---|---|---|---|
| SWE-bench Verified | 79.6% | 70.3% | 83.8% |
| OSWorld (computer use) | 72.5% | 42.0% | 72.7% |
| ARC-AGI-2 (reasoning) | 58.3% | 13.6% | ~65% |
| Math | 89% | 62% | - |
| Terminal-Bench 2.0 | 52.5% | 40.5% | 56.7% |
| TAU-bench Airline | 62.0% | 57.6% | 67.8% |
| TAU-bench Retail | 67.0% | 63.2% | 67.5% |
| GDPval-AA (Elo) | 1633 | - | 1606 |

The SWE-bench jump from 70.3% to 79.6% is a 13% relative improvement over Sonnet 4.5. But the standout is OSWorld, the benchmark that measures a model's ability to autonomously operate computer interfaces - clicking buttons, navigating applications, completing multi-step workflows. Sonnet 4.6 scored 72.5%, essentially tied with Opus 4.6 at 72.7%, and demolishing GPT-5.2's 38.2%. For context, when computer use first launched with Sonnet 3.5 in October 2024, the score was 14.9%. That is a fivefold improvement in sixteen months.

The ARC-AGI-2 result deserves special attention. This abstract reasoning benchmark went from 13.6% (Sonnet 4.5) to 58.3% - a 4.3x improvement in a single generation. It is the largest single-generation gain Anthropic has published on any benchmark.

On GDPval-AA, which measures real-world office and knowledge work tasks, Sonnet 4.6 actually leads Opus 4.6 with an Elo of 1633 versus 1606. This is the first time a Sonnet model has outscored its Opus counterpart on any major benchmark.

Coding: Where It Matters Most

For developers - and given this site's audience, that means most of you - the coding benchmarks are the real story. Sonnet 4.6 scores 79.6% on SWE-bench Verified, putting it within 4.2 points of Opus 4.6 and comfortably ahead of GPT-5.2 and Gemini 3 Pro.

In Claude Code testing, developers preferred Sonnet 4.6 over Sonnet 4.5 70% of the time and over the previous flagship Opus 4.5 59% of the time. Early adopters report that the model reads context more effectively before modifying code, consolidates shared logic rather than duplicating it, and generates noticeably more polished frontend output with better layouts, animations, and design sensibility.

The model particularly excels at complex code fixes that require searching across large codebases - exactly the kind of task where the 1M context window earns its keep. For developers using AI coding assistants like Cursor, Windsurf, or Claude Code, the recommendation from multiple reviewers is the same: switch immediately. Sonnet 4.6 is faster, cheaper, and arguably smarter for practical development work than flagship models released just months ago.

Where GPT-5.3 Codex still holds an edge is in terminal-based workflows (77.3% on Terminal-Bench 2.0 vs. Sonnet 4.6's 52.5%) and multi-language real-world tasks. Gemini 3.1 Pro dominates competitive and algorithmic coding, with a LiveCodeBench Elo of 2,887. But for the daily work of building and maintaining software, Sonnet 4.6 sits at or near the top - and at a lower per-token price than either Opus 4.6 or GPT-5.3 Codex.

Computer Use: The Quiet Revolution

While coding gets the headlines, Sonnet 4.6's computer use capabilities might be its most consequential feature. The 72.5% OSWorld score means the model can successfully operate computer interfaces - navigating legacy software, filling forms, completing multi-step workflows - with a reliability that starts to look useful rather than merely impressive.

This matters because almost every organization runs legacy systems that lack usable APIs: insurance portals, government databases, ERP systems, hospital scheduling tools. A model that can look at a screen and interact with it opens all of these to automation without building custom integrations. At $3 per million input tokens, that automation becomes cost-effective even for relatively mundane tasks.

The 94% accuracy on the Pace insurance benchmark - a real-world test of computer use in an actual enterprise environment - suggests this is not just a benchmark game. There are production-grade applications waiting.

That said, Anthropic is candid that the model "still lags behind most skilled humans" at computer use, and prompt injection remains a risk when the model interacts with web content. The improvements over Sonnet 4.5 in prompt injection resistance are significant, but mitigation strategies remain essential.

The Token Efficiency Problem

Every good review needs to dig into the weaknesses, and Sonnet 4.6 has a notable one: it is verbose.

On the GDPval-AA benchmark, Sonnet 4.6 consumed 280 million tokens to achieve its leading Elo score. Sonnet 4.5 used 58 million on the same evaluation - a 4.8x difference. For tasks involving adaptive thinking or extended reasoning, Sonnet 4.6 can burn through up to four times more tokens than its predecessor.

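This creates a counterintuitive situation. Sonnet 4.6 costs $3/$15 per million tokens versus Opus 4.6's $15/$75, so Opus charges exactly five times as much per token. The break-even point is therefore a 5x token ratio: if Sonnet needs 4.5x as many tokens as Opus to finish the same task, its bill is already 90% of the Opus bill, and beyond 5x the all-in cost actually exceeds Opus. (The 4.8x figure above compares Sonnet 4.6 with Sonnet 4.5, not with Opus.) In practice, this means engineers need to think about model routing - using Sonnet for the vast majority of tasks where it is both cheaper and sufficient, but switching to Opus for deep reasoning chains where token efficiency matters.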
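
The break-even arithmetic is easy to check with a few lines of code. This sketch uses only the list prices quoted in this review; the Opus token budget inside `sonnet_to_opus_cost_ratio` is an arbitrary hypothetical baseline, and the model labels are plain dictionary keys, not API model IDs.

```python
# Sketch: compare the all-in cost of the same task on Sonnet 4.6 and Opus 4.6
# at the list prices quoted in this review. Token counts are hypothetical.
PRICES = {  # USD per million tokens: (input, output)
    "sonnet-4.6": (3.00, 15.00),
    "opus-4.6": (15.00, 75.00),
}

def task_cost(model: str, input_tokens: float, output_tokens: float) -> float:
    """Return the USD cost of a task on the given model."""
    inp, out = PRICES[model]
    return (input_tokens * inp + output_tokens * out) / 1_000_000

def sonnet_to_opus_cost_ratio(token_multiplier: float) -> float:
    """Sonnet's bill as a fraction of Opus's when Sonnet needs
    `token_multiplier` times as many input and output tokens."""
    base_in, base_out = 1_000_000, 100_000  # arbitrary Opus token budget
    sonnet = task_cost("sonnet-4.6", base_in * token_multiplier,
                       base_out * token_multiplier)
    opus = task_cost("opus-4.6", base_in, base_out)
    return sonnet / opus
```

Because Opus's input and output prices are both exactly five times Sonnet's, the ratio works out to the token multiplier divided by five regardless of the input/output mix: `sonnet_to_opus_cost_ratio(4.5)` returns roughly 0.9, and any multiplier above 5.0 makes Sonnet the more expensive option.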

Latent Space's analysis captured this well: developers should treat Sonnet 4.6 as the default, with Opus 4.6 as a surgical tool for tasks requiring maximum reliability in 20+ step reasoning chains or deep scientific analysis (the GPQA Diamond gap remains wide at 74.1% vs. 91.3%).
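
A routing policy along these lines can start very simply. The sketch below is hypothetical: the `Task` fields, the model labels, and the 20-step threshold (taken from the "20+ step reasoning chains" guidance above) are illustrative assumptions, not part of any Anthropic API. In practice, estimating reasoning depth up front is the hard part, and teams often fall back to heuristics or a cheap classifier call.

```python
from dataclasses import dataclass

# Hypothetical router: default to Sonnet 4.6 and escalate to Opus 4.6 only
# for long multi-step reasoning chains or deep scientific analysis.

@dataclass
class Task:
    description: str
    expected_reasoning_steps: int = 1  # caller's estimate of chain length
    scientific_depth: bool = False     # e.g. GPQA-style expert analysis

def choose_model(task: Task, step_threshold: int = 20) -> str:
    """Return a model label for the task (labels are illustrative, not API IDs)."""
    if task.scientific_depth or task.expected_reasoning_steps >= step_threshold:
        return "opus-4.6"
    return "sonnet-4.6"
```

For example, `choose_model(Task("refactor auth module", expected_reasoning_steps=6))` returns `"sonnet-4.6"`, while a task flagged with `scientific_depth=True` is sent to Opus.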

New Capabilities Worth Noting

Beyond raw performance, Sonnet 4.6 ships with several meaningful new features:

- Adaptive thinking: The model dynamically adjusts reasoning depth based on task complexity, similar to Opus 4.6's implementation

- Context compaction (beta): Automatically summarizes older conversation context as conversations approach limits, effectively extending usable context length

- Improved instruction following: Early testers report noticeably better adherence to constraints and formatting requirements

- Enhanced prompt injection resistance: Major improvements over Sonnet 4.5, though not yet at Opus 4.6 levels

- Code execution and web search: Now generally available, with automatic code-based filtering of search results to keep context clean
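
The idea behind context compaction can be illustrated with a client-side approximation. This sketch is not Anthropic's implementation: once the conversation's estimated token count passes a limit, every message except the most recent few is replaced by a single summary message. The `count_tokens` and `summarize` callables are placeholders for whatever tokenizer and summarization call you choose to plug in.

```python
from typing import Callable

# Illustrative client-side approximation of context compaction (not Anthropic's
# implementation). Token counting and summarization are injected as callables.

Message = dict  # {"role": "user" | "assistant", "content": str}

def compact(messages: list[Message],
            count_tokens: Callable[[str], int],
            summarize: Callable[[list[Message]], str],
            limit: int,
            keep_recent: int = 4) -> list[Message]:
    """If the history exceeds `limit` tokens, replace all but the last
    `keep_recent` messages with one summary message."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(count_tokens(m["content"]) for m in messages)
    if total <= limit or len(messages) <= keep_recent:
        return messages  # under budget, or nothing old enough to compact
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    summary = {"role": "user",
               "content": "Summary of earlier conversation: " + summarize(older)}
    return [summary] + recent
```

Keeping the most recent turns verbatim preserves the immediate working context, while the summary carries forward older decisions at a fraction of their original token cost.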

Strengths

- Near-Opus performance on coding (79.6% SWE-bench) and computer use (72.5% OSWorld) at one-fifth the price

- 1M token context window opens full-codebase analysis at the mid-tier price point

- Best-in-class on GDPval-AA knowledge work tasks (Elo 1633)

- 4.3x improvement on ARC-AGI-2 in a single generation

- Available on free tier, making frontier-class coding AI accessible to everyone

- Same $3/$15 pricing as Sonnet 4.5 - no premium for the massive upgrades

Weaknesses

- Token consumption up to 4.8x higher than Sonnet 4.5 (280M vs. 58M tokens on GDPval-AA)

- All-in cost can exceed Opus for some workloads despite lower per-token pricing

- GPQA Diamond score (74.1%) shows a significant gap with Opus (91.3%) on deep scientific reasoning

- Terminal-bench performance (52.5%) lags behind GPT-5.3 Codex (77.3%)

- Early post-launch reports of structured output errors and hallucinated function names (reportedly fixed)

- Extended context (200K+) carries premium pricing that erodes the cost advantage

Verdict: 9.0/10

Claude Sonnet 4.6 is the most impressive mid-tier model release I have reviewed. It does not merely narrow the gap with flagship models - it closes it on the benchmarks that matter most to developers and enterprise users. The fact that it outscores Opus 4.6 on office task benchmarks while costing 80% less is, frankly, extraordinary.

The token efficiency issue is real and worth monitoring, particularly for teams running complex agentic workloads at scale. And if your work demands peak scientific reasoning, Opus 4.6 remains the better choice. But for the overwhelming majority of use cases - coding, computer use, document analysis, knowledge work - Sonnet 4.6 delivers roughly 98% of Opus performance at a fraction of the cost.

The strategic implication is clear: Anthropic is compressing the performance gap between its tiers faster than anyone expected. If you are choosing an LLM for a new project in 2026, Sonnet 4.6 should be your starting point, not Opus. That is both a compliment to this model and a challenge for Anthropic's own pricing strategy. When your mid-tier product is this good, how do you justify the premium?

For an overview of how to navigate the current model landscape, see our guide to choosing an LLM in 2026. And if cost-per-task matters to your deployment, check our cost efficiency leaderboard for the latest comparisons.

Sources:

- Introducing Claude Sonnet 4.6 - Anthropic

- Claude Sonnet 4.6: Complete Guide to Benchmarks, Features, and Pricing - NxCode

- Claude Sonnet 4.6 vs. GPT-5: The 2026 Developer Benchmark - SitePoint

- Anthropic's Sonnet 4.6 Matches Flagship AI Performance at One-Fifth the Cost - VentureBeat

- AINews: Claude Sonnet 4.6 Clean Upgrade - Latent Space

- High Token Usage in Claude Sonnet 4.6 Limits Value for Long Reasoning Tasks - Geeky Gadgets

- Claude Sonnet 4.6 is the New Best Model for Writing Scrapers - Zyte

- Gemini 3.1 Pro vs Claude Sonnet 4.6 vs GPT-5.3 Coding Comparison - Bind AI

- Anthropic Releases Claude Sonnet 4.6 - CNBC

- Claude Sonnet 4.6: Benchmarks, Pricing and Complete Guide - Digital Applied