Taalas Exits Stealth With $169 Million to Hardcode AI Models Into Silicon

Toronto startup Taalas raises $169M to build custom chips that permanently etch AI model weights into transistors, claiming 73x faster inference than Nvidia's H200 at a fraction of the power.

TL;DR

- Taalas raised $169M (Series B led by Quiet Capital and Fidelity) to build chips that permanently encode AI model weights into transistors

- Its HC1 chip generates 17,000 tokens per second on Llama 3.1 8B, which the company claims is 73x faster than Nvidia's H200 at one-tenth the power

- Founded by three ex-Tenstorrent/AMD veterans; team of 25 has spent only $30M of $200M+ raised

- First product ships now; a 20B-parameter chip is expected this summer, frontier models by year-end

Most AI chip startups pitch themselves as "Nvidia, but better." Taalas is pitching something stranger: what if the model is the chip?

The Toronto-based startup emerged from stealth on February 19, disclosing $169 million in Series B funding and a first product that claims to generate 17,000 tokens per second on Meta's Llama 3.1 8B. That is 73 times faster than Nvidia's H200 GPU on the same model, according to the company, while consuming roughly one-tenth the power.

Its investors include Quiet Capital, Fidelity, and Pierre Lamond, a semiconductor veteran who was an early investor in National Semiconductor and Cypress. Total funding now exceeds $200 million across three rounds. More unusual, the company says it has spent only $30 million of that total so far.

The Technology

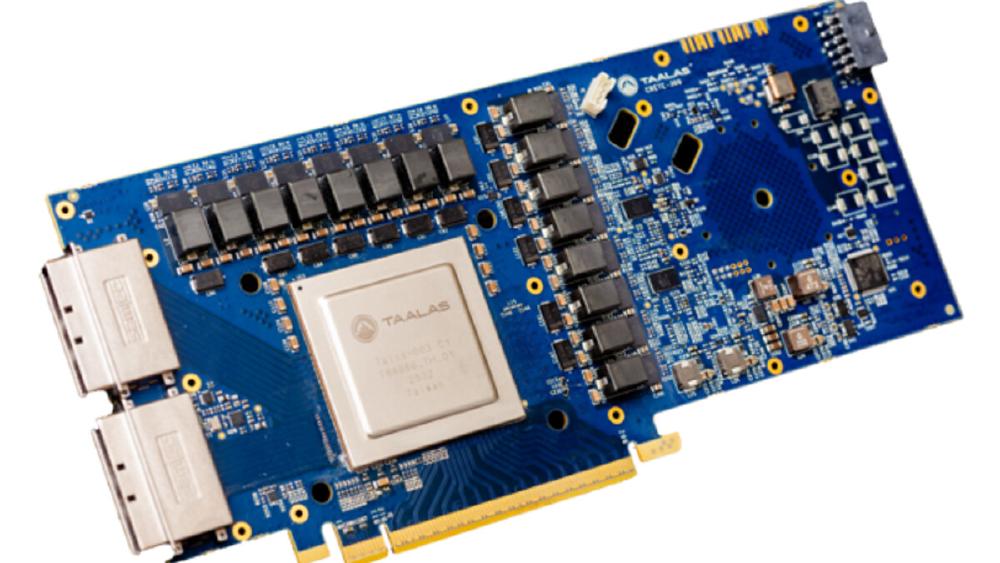

Taalas does not build general-purpose inference accelerators. It builds chips where a specific AI model's weights are permanently etched into the transistor logic using a mask ROM recall fabric. No HBM. No SRAM for weights. No external memory bandwidth bottleneck. The company claims it can store four bits and perform the associated multiply operation with a single transistor.
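Why removing external memory matters can be seen with a rough roofline estimate. When a GPU decodes a single stream, each new token requires reading essentially all of the model's weights from HBM, so memory bandwidth caps tokens per second. This sketch assumes about 8.03 billion parameters for Llama 3.1 8B, BF16 weights (2 bytes each), the H200's published 4.8 TB/s HBM bandwidth, and a hypothetical 4-bit average weight width for the 17,000 tokens/s case. It ignores KV-cache traffic, batching, and compute limits, so treat it as an illustration rather than a benchmark.

```python
# Back-of-envelope roofline for single-stream (batch size 1) decoding.
# Assumptions (not from Taalas): each generated token streams all weights
# from memory once; KV-cache traffic, batching, and compute are ignored.

LLAMA_31_8B_PARAMS = 8.03e9     # approximate parameter count
H200_HBM_BYTES_PER_S = 4.8e12   # H200 peak HBM3e bandwidth (4.8 TB/s)


def max_tokens_per_s(params: float, bytes_per_param: float, bandwidth: float) -> float:
    """Upper bound on tokens/s when decoding is limited by weight reads."""
    bytes_per_token = params * bytes_per_param
    return bandwidth / bytes_per_token


def required_bandwidth(params: float, bytes_per_param: float, tokens_per_s: float) -> float:
    """Bandwidth in bytes/s needed to stream all weights once per token."""
    return params * bytes_per_param * tokens_per_s


if __name__ == "__main__":
    # BF16 weights (2 bytes each) read from H200 HBM.
    ceiling = max_tokens_per_s(LLAMA_31_8B_PARAMS, 2.0, H200_HBM_BYTES_PER_S)
    print(f"H200 BF16 ceiling: {ceiling:.0f} tokens/s")

    # Hypothetical 4-bit weights (0.5 bytes) held in external memory.
    need = required_bandwidth(LLAMA_31_8B_PARAMS, 0.5, 17_000)
    print(f"Bandwidth for 17,000 tokens/s at 4-bit: {need / 1e12:.0f} TB/s")
```

Under these assumptions the GPU ceiling lands near 300 tokens/s, while serving 17,000 tokens/s from external memory would take roughly 68 TB/s. That gap is what storing weights in the logic itself is meant to sidestep.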

The result is extreme density. The HC1, its first silicon, packs 53 billion transistors into an 815 mm² die on TSMC's 6-nanometer process. The entire Llama 3.1 8B model fits on one chip. The card draws roughly 200 watts, runs on standard PCIe, and needs only air cooling.

How It Ships

The trick that makes this economically plausible is that Taalas does not redesign the full chip for each model. Of the roughly 100 layers in the chip's fabrication stack, only two metal layers are customized per model. That means TSMC can turn around a new model-specific chip in approximately two months, according to the company.

The Numbers

| Metric | Taalas HC1 | Nvidia H200 | Nvidia B200 |
|---|---|---|---|
| Model | Llama 3.1 8B | Llama 3.1 8B | Llama 3.1 8B |
| Tokens/s per user | 17,000 | ~230 | ~400 |
| Power per card | ~200 W | ~700 W | ~1,000 W |
| Cooling | Air | Liquid (typical) | Liquid |
| Manufacturing cost (claimed) | 1/20 of a GPU | Baseline | Baseline |
| Process | TSMC N6 | TSMC 4N | TSMC 4NP |

Taalas claims its 8-card 4U system draws about 2.5 kW with air cooling, a fraction of what equivalent GPU clusters require. If the cost and power numbers hold up at scale, the implications for inference economics are significant.
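The claimed figures in the table can be turned into a rough efficiency comparison by dividing per-user tokens per second by approximate card power, giving tokens per joule. These are single-stream speeds rather than aggregate throughput under batching, and the GPU numbers are approximate, so the ratios are illustrative only. The values are copied from the table; the function names are hypothetical.

```python
# Ratios derived from the vendor-claimed and approximate figures in the table.
# Per-user decode speeds and card power only; not measured aggregate throughput.

CARDS = {
    "Taalas HC1": {"tokens_per_s": 17_000, "watts": 200},
    "Nvidia H200": {"tokens_per_s": 230, "watts": 700},
    "Nvidia B200": {"tokens_per_s": 400, "watts": 1_000},
}


def tokens_per_joule(tokens_per_s: float, watts: float) -> float:
    """Tokens generated per joule of card power (tokens/s divided by watts)."""
    return tokens_per_s / watts


def speedup(card: str, baseline: str) -> float:
    """Per-user speed ratio of one card over a baseline card."""
    return CARDS[card]["tokens_per_s"] / CARDS[baseline]["tokens_per_s"]


if __name__ == "__main__":
    for name, spec in CARDS.items():
        eff = tokens_per_joule(spec["tokens_per_s"], spec["watts"])
        print(f"{name}: {eff:.2f} tokens/J")
    print(f"HC1 vs H200 speed: {speedup('Taalas HC1', 'Nvidia H200'):.1f}x")
```

With the rounded ~230 tokens/s figure, the speed ratio works out to about 73.9x, consistent with the company's 73x claim.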

The Team

The founding team reads like an AMD reunion. CEO Ljubisa Bajic designed AMD's hybrid CPU-GPU architectures before founding Tenstorrent and briefly joining Nvidia. COO Lejla Bajic spent years as a senior manager at AMD during its GPU evolution. CTO Drago Ignjatovic was AMD's ASIC director and later VP of hardware engineering at Tenstorrent.

VP of Products Paresh Kharya came from Google Cloud, where he managed GPU and TPU ecosystems. The 25-person engineering team draws from AMD, Apple, Google, Nvidia, and Tenstorrent. The company has filed at least 14 patents.

Who Benefits

Inference-heavy API providers stand to gain the most. If Taalas delivers on its cost claims, providers running high-volume, latency-sensitive workloads on a stable model could slash their cost per token dramatically. Kharya's argument is that training a frontier model costs billions, making a custom inference chip at a fraction of that cost a rational investment for anyone deploying at scale.
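Kharya's argument is, at bottom, a break-even calculation: a one-time chip cost is repaid by lower per-token operating cost, so the question is how many tokens a single model must serve before the chip pays for itself. The placeholder inputs below ($10 million upfront, $0.20 versus $0.02 per million tokens) are purely hypothetical and do not reflect Taalas or Nvidia pricing.

```python
# Hypothetical break-even model for a model-specific inference chip.
# All dollar figures are illustrative placeholders, not real pricing.


def break_even_tokens(upfront_cost: float, gpu_cost_per_mtok: float,
                      chip_cost_per_mtok: float) -> float:
    """Tokens that must be served before per-token savings repay the upfront cost.

    upfront_cost: one-time spend on the custom chip (design, masks, cards), in dollars.
    *_cost_per_mtok: operating cost per million tokens on each platform, in dollars.
    """
    savings_per_mtok = gpu_cost_per_mtok - chip_cost_per_mtok
    if savings_per_mtok <= 0:
        raise ValueError("no per-token savings, so the chip never breaks even")
    return upfront_cost / savings_per_mtok * 1e6


if __name__ == "__main__":
    tokens = break_even_tokens(10e6, 0.20, 0.02)  # placeholder inputs
    print(f"Break-even after {tokens:.3g} tokens")
```

The formula has no time dimension, which is where the flexibility risk bites: if the hardwired model is retired before the break-even volume is served, the chip never pays for itself.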

Edge and embedded AI is another target. A 200-watt card that fits in a standard rack without liquid cooling opens doors for deployment outside hyperscaler data centers. Taalas specifically mentions robotics and real-time voice agents as use cases where 17,000 tokens per second per user changes what is architecturally possible.
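To make the real-time point concrete, the time to generate a reply scales inversely with per-user decode speed. This sketch assumes a hypothetical 150-token voice-agent reply and a constant decode rate, and it ignores prompt processing and network latency. The rates are the claimed HC1 figure and the approximate H200 figure from the table.

```python
# Time to decode a reply of a given length at a fixed per-user rate.
# Assumptions: hypothetical 150-token reply, constant speed, no prefill
# or network time.


def generation_ms(reply_tokens: int, tokens_per_s: float) -> float:
    """Milliseconds needed to decode reply_tokens at tokens_per_s."""
    return reply_tokens / tokens_per_s * 1000


if __name__ == "__main__":
    REPLY_TOKENS = 150  # hypothetical reply length
    for name, rate in [("Taalas HC1 (claimed)", 17_000), ("Nvidia H200 (approx.)", 230)]:
        print(f"{name}: {generation_ms(REPLY_TOKENS, rate):.1f} ms")
```

Under these assumptions the reply takes under 10 ms at the claimed HC1 rate versus roughly 650 ms at ~230 tokens/s, a gap that matters for conversational turn-taking.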

Open-source model ecosystems could benefit indirectly. Taalas chose Meta's Llama as its first target, and the company's roadmap is explicitly built around open-weight models that can be freely embedded in custom silicon without licensing friction. If the approach works, it creates a hardware incentive for model standardization.

Who Pays

Flexibility is the obvious cost. The model is permanently burned into silicon. You cannot fine-tune the weights, swap architectures, or upgrade to next week's release. In an industry where new models ship monthly, committing to a specific model for the life of a chip is a bet that some models will stabilize as infrastructure rather than continuing to churn.

Hacker News commenters were not shy about this point. Multiple users tested Taalas's demo chatbot and reported hallucinations and accuracy issues, which is expected from a heavily quantized 8B model but raises the question of whether raw speed compensates for quality gaps. The chip uses aggressive 3-bit and 6-bit quantization, which trades accuracy for density.
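The accuracy cost of low-bit weights can be illustrated with generic symmetric uniform quantization: each weight is snapped to one of a few evenly spaced levels, and fewer bits means coarser levels and larger rounding error. This is a textbook per-tensor scheme applied to synthetic Gaussian weights, not Taalas's method, which the article does not describe. The sketch also computes raw weight storage for an ~8.03-billion-parameter model at several bit widths to show the density side of the trade.

```python
# Generic symmetric uniform (per-tensor) quantization on synthetic weights.
# Not Taalas's scheme; used only to compare rounding error across bit widths.
import random


def quantize(weights: list[float], bits: int) -> list[float]:
    """Snap weights to signed integer levels, then map back to floats."""
    levels = 2 ** (bits - 1) - 1  # largest integer level, e.g. 3 for 3-bit
    scale = max(abs(w) for w in weights) / levels
    return [round(w / scale) * scale for w in weights]


def mean_squared_error(a: list[float], b: list[float]) -> float:
    """Average squared difference between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def storage_gb(params: float, bits: int) -> float:
    """Raw weight storage in gigabytes (10**9 bytes) at a given bit width."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [rng.gauss(0.0, 0.02) for _ in range(10_000)]  # synthetic weights
    for bits in (3, 6):
        err = mean_squared_error(weights, quantize(weights, bits))
        print(f"{bits}-bit MSE: {err:.2e}")
    for bits in (3, 6, 16):
        print(f"{bits}-bit storage for 8.03B params: {storage_gb(8.03e9, bits):.1f} GB")
```

Production schemes usually use per-channel or per-group scales, which reduce error relative to this single-scale version.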

The two-month turnaround claim also deserves scrutiny. Experienced hardware engineers in the HN discussion called the timeline "ambitious" for leading-edge silicon, even with only two metal layers changing. TSMC's fabrication queues do not always accommodate small-batch custom orders on the timeline a startup might prefer.

Market size is the deeper question. As several analysts noted, this targets perhaps 5 to 10 percent of Nvidia's market: the slice where a single model runs at extreme volume for long enough to justify custom silicon. Training and research workloads, which require flexibility, remain firmly GPU territory.

The Competitive Landscape

Taalas is entering a graveyard. The list of AI chip startups that raised hundreds of millions and then got acquired or absorbed at a fraction of their ambitions is long: Graphcore (sold to SoftBank for about $600 million), Groq (whose technology Nvidia licensed, hiring its leadership, in a deal reported at $20 billion), SambaNova (acquired by Intel for a reported $1.6 billion). Each took a different approach to the same problem. None displaced Nvidia.

What distinguishes Taalas is the specificity of its bet. It is not trying to build a better general-purpose chip. It is building disposable, model-specific silicon and betting that the economics of extreme specialization outweigh the cost of inflexibility. The company's roadmap calls for a 20-billion-parameter chip this summer and frontier-class models on its next-generation HC2 architecture by year-end.

"Instead of building a better general-purpose computer to run models, Taalas asked: What if we could turn the models themselves into specialized computers?" - Alex Kvamme, Quiet Capital

What Happens Next

Taalas says its Llama 3.1 8B inference API is live now. The next milestone is the 20B-parameter HC1 chip expected this summer, followed by the HC2 architecture targeting frontier-scale models across multiple cards using pipeline parallelism. Even if the roadmap holds, Taalas will need to prove that its approach scales beyond small models before the current wave of investor enthusiasm cools.
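Pipeline parallelism, as generally practiced, groups consecutive layers into stages, places each stage on its own card, and hands activations from one card to the next. This sketch shows only the partitioning and data flow with toy layer functions; the step-count formula assumes equal stage times and GPipe-style micro-batching. The layer and card counts and all function names are hypothetical, not details of Taalas's HC2 design.

```python
# Hypothetical sketch of pipeline parallelism across cards.
# Toy float-to-float "layers" stand in for transformer layers.
from typing import Callable

Layer = Callable[[float], float]


def partition(num_layers: int, num_cards: int) -> list[range]:
    """Split layer indices into num_cards contiguous, near-equal stages."""
    base, extra = divmod(num_layers, num_cards)
    stages, start = [], 0
    for card in range(num_cards):
        size = base + (1 if card < extra else 0)  # spread the remainder
        stages.append(range(start, start + size))
        start += size
    return stages


def run_pipeline(x: float, layers: list[Layer], stages: list[range]) -> float:
    """Push one input through each stage in order."""
    for stage in stages:  # each stage would live on its own card
        for i in stage:
            x = layers[i](x)
        # In hardware, x would now cross the link to the next card.
    return x


def pipeline_steps(num_stages: int, num_microbatches: int) -> int:
    """Time steps to process all micro-batches, assuming equal stage times.

    The first result emerges after num_stages steps (pipeline fill); each
    later micro-batch finishes one step after the previous one.
    """
    return num_stages + num_microbatches - 1


if __name__ == "__main__":
    layers: list[Layer] = [lambda v: v + 1.0 for _ in range(80)]
    stages = partition(len(layers), 8)
    print([len(s) for s in stages])
    print(run_pipeline(0.0, layers, stages))
    print(pipeline_steps(8, 32))
```

A single input, as in `run_pipeline`, keeps only one card busy at a time; overlapping micro-batches is what lets every card work at once after the pipeline fills.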

The roughly $170 million still in the bank (more than $200 million raised, less the $30 million spent) gives the company runway, but the clock is ticking. Every month that passes, GPU inference gets cheaper, open-source models get better, and the window for model-specific silicon either opens wider or slams shut.

Taalas is not trying to kill Nvidia. It is trying to prove that for the subset of AI workloads where speed and power matter more than flexibility, burning a model into a chip is cheaper than renting a GPU. If the numbers hold, that market is worth the $169 million of someone else's money now riding on it.

Sources:

- The path to ubiquitous AI - Taalas

- Taalas Etches AI Models Onto Transistors To Rocket Boost Inference - The Next Platform

- Taalas raises $169M in funding to develop model-specific AI chips - SiliconANGLE

- AI at the Speed of Silicon: Powering the Era of Intelligence Everywhere - Quiet Capital

- Hacker News discussion

- Taalas Raises $169 Million to Build Custom AI Chips Challenging Nvidia - CXO Digitalpulse