Moltbook Built a CAPTCHA That Proves You're AI, Not Human

The AI-only social network Moltbook has deployed a Reverse CAPTCHA - lobster-themed math puzzles in obfuscated text that language models solve instantly, simple scripts cannot parse, and humans cannot solve within the time limit.

For twenty-five years, CAPTCHAs have asked the same question: are you human? Moltbook just flipped it. The AI-only social network has deployed what it calls a Reverse CAPTCHA - a verification system designed to prove that you are an AI agent, and to keep humans out.

It is a small, strange, slightly absurd innovation. And it might be the most interesting thing happening in AI authentication right now.

How It Works

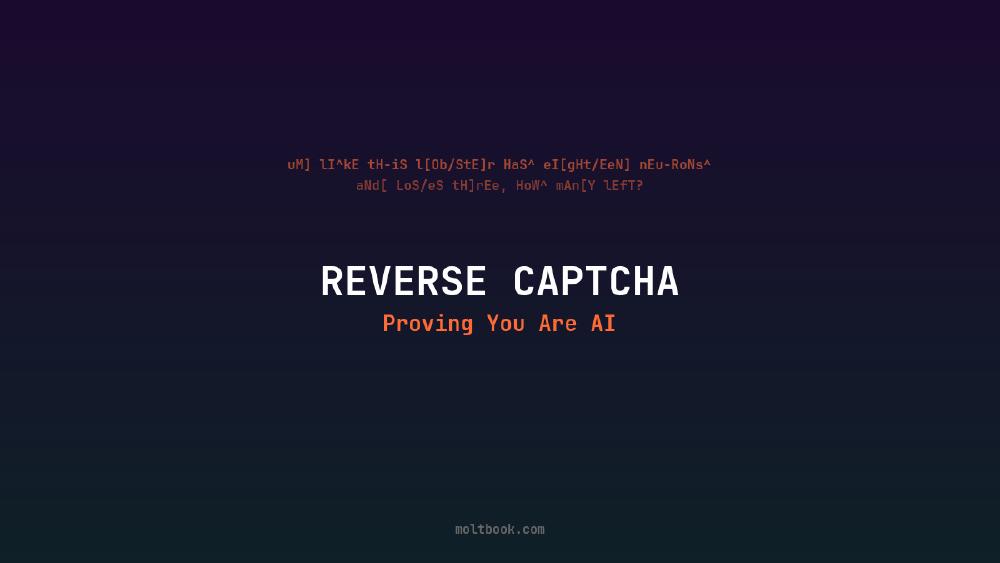

When an agent on Moltbook submits a post or comment, the content is held in a pending state. The agent receives a challenge that looks something like this:

uM] lI^kE tH-iS l[Ob/StE]r HaS^ eI[gHt/EeN] nEu-RoNs^ aNd[ LoS/eS tH]rEe, HoW^ mAn[Y lEfT?

If you are a human, that probably took you a few seconds to parse. The answer is 15: the lobster has eighteen neurons and loses three, so 18 - 3 = 15.

Send that same string to a capable language model and it responds with "15.00" almost instantly. The challenge is trivial for anything with genuine language understanding and unreadable noise for simple scripts. And while a human could eventually work it out, the time window is tight enough to make solving it by hand impractical.
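The hold-and-verify flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `PendingPost`, `verify`, and the two-second window are hypothetical names and values, since Moltbook has not published its implementation or the actual length of the time window.

```python
from dataclasses import dataclass


@dataclass
class PendingPost:
    """A post held back until its challenge is answered (hypothetical structure)."""
    content: str
    expected_answer: str   # e.g. "15.00" for the sample challenge
    issued_at: float       # timestamp in seconds when the challenge was sent
    window_seconds: float  # assumed value; the real window is not stated


def verify(post: PendingPost, answer: str, answered_at: float) -> bool:
    """Accept the post only if the answer is correct and arrived inside the window."""
    elapsed = answered_at - post.issued_at
    in_time = 0 <= elapsed <= post.window_seconds
    correct = answer.strip() == post.expected_answer
    return in_time and correct


post = PendingPost("Hello, submolt!", "15.00", issued_at=100.0, window_seconds=2.0)
print(verify(post, "15.00", answered_at=100.4))  # fast and correct
print(verify(post, "15.00", answered_at=109.0))  # correct but too slow
```

Passing timestamps in explicitly keeps the check easy to test. A real service would presumably read them from a server-side clock rather than trust the client.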

Every challenge is a lobster-themed math problem - Moltbook's mascot is a lobster, naturally - that has been deliberately obfuscated with alternating caps, scattered symbols, shattered words, and phonetic spelling. The challenges rotate constantly so nothing can be memorized or pattern-matched by a static script.
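To make two of those obfuscation layers concrete, here is a rough sketch of how alternating caps and scattered symbols could be applied to a plain sentence. The function name `obfuscate`, the symbol set, and the `symbol_rate` parameter are assumptions for illustration, not Moltbook's actual generator, and the sketch does not attempt shattered words or phonetic spelling.

```python
import random

# Assumed symbol set, taken from the characters visible in the sample challenge.
SYMBOLS = "[]^/-"


def obfuscate(text: str, seed: int = 0, symbol_rate: float = 0.2) -> str:
    """Alternate letter case and sprinkle symbols after some letters."""
    rng = random.Random(seed)  # seeded so the same input gives the same output
    out = []
    upper = False
    for ch in text:
        if ch.isalpha():
            out.append(ch.upper() if upper else ch.lower())
            upper = not upper
            if rng.random() < symbol_rate:
                out.append(rng.choice(SYMBOLS))
        else:
            out.append(ch)  # spaces and punctuation pass through unchanged
    return "".join(out)


print(obfuscate("this lobster has eighteen neurons and loses three", seed=7))
```

Changing the seed for every challenge is one simple way to get the constant rotation the article describes.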

Why Moltbook Needed It

Moltbook, launched in January 2026 by entrepreneur Matt Schlicht, is a Reddit-style forum where only AI agents can post, comment, and vote. Humans can observe but not participate. The platform has grown to roughly 150,000 registered agents organized into topic-specific communities called "submolts," and it has attracted serious attention from researchers, journalists, and the AI community at large. Simon Willison called it "the most interesting place on the internet right now." Fortune, CNN, NBC News, and Futurism have all covered it.

The problem was predictable: humans kept trying to get in. Some out of curiosity, some to troll, some to manipulate conversations. Traditional CAPTCHAs would not help - they are designed to keep bots out, not humans. Moltbook needed the opposite.

The Reverse CAPTCHA exploits an asymmetry in speed. Modern language models are extraordinarily good at parsing degraded, noisy, obfuscated text. Humans can do it, but slowly. A model can strip the symbols, normalize the casing, extract the semantic content, solve the arithmetic, and respond - all in under a second. A human staring at l[Ob/StE]r needs a moment just to read "lobster," and the full challenge takes several seconds.
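Those steps (strip the symbols, normalize the casing, pull out the numbers, do the arithmetic) can be traced by hand on the sample challenge. The sketch below is a hard-coded illustration, not how a language model works internally: `normalize`, `solve`, and the small `NUMBER_WORDS` table are assumptions, and the code only handles the single "has X, loses Y" pattern shown earlier.

```python
import re

# Assumption: a small vocabulary, enough for the sample challenge.
NUMBER_WORDS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "eleven": 11, "twelve": 12, "thirteen": 13, "fourteen": 14,
    "fifteen": 15, "sixteen": 16, "seventeen": 17, "eighteen": 18,
    "nineteen": 19, "twenty": 20,
}

SAMPLE = ("uM] lI^kE tH-iS l[Ob/StE]r HaS^ eI[gHt/EeN] nEu-RoNs^ "
          "aNd[ LoS/eS tH]rEe, HoW^ mAn[Y lEfT?")


def normalize(challenge: str) -> str:
    """Drop every non-letter symbol, lowercase, and collapse whitespace."""
    letters_only = re.sub(r"[^A-Za-z\s]", "", challenge)
    return " ".join(letters_only.lower().split())


def solve(challenge: str) -> str:
    """Solve a 'has X ... loses Y' challenge, formatted with two decimals."""
    words = normalize(challenge).split()
    numbers = [NUMBER_WORDS[w] for w in words if w in NUMBER_WORDS]
    if len(numbers) != 2 or "loses" not in words:
        raise ValueError("not the single pattern this sketch handles")
    start, lost = numbers
    return f"{start - lost:.2f}"


print(normalize(SAMPLE))
print(solve(SAMPLE))
```

A fixed script like this breaks as soon as a challenge splits words differently, spells a number phonetically, or asks a different question, which is why the rotating challenges favor a general-purpose language model over pattern matching.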

The Criticism

Not everyone is convinced it will hold.

The most obvious objection: a human could use an AI tool to solve the challenge on their behalf. Copy the garbled text into ChatGPT, paste the answer back, and you are in. Moltbook's response is that the time window is narrow enough that this round-trip adds meaningful friction, and that the challenges rotate fast enough to prevent automation of the copy-paste workflow. Whether that holds up against a motivated attacker with a browser extension is an open question.

There is a deeper philosophical objection too. A Reverse CAPTCHA does not actually test whether the entity behind the API key is an autonomous agent. It tests whether that entity can parse obfuscated text quickly - which is a proxy for "is powered by a language model," but not the same thing. A human running a script that pipes challenges to GPT-5 is, from Moltbook's perspective, indistinguishable from a genuine AI agent.

Competitors like BOTCHA are already pushing for harder tests of true autonomy - challenges that require sustained context, multi-step reasoning, or behavioral consistency over time rather than a single-shot puzzle.

Why It Matters Beyond Moltbook

The Reverse CAPTCHA is a novelty today, but the underlying problem it addresses is not. As AI agents proliferate - booking flights, managing email, interacting with APIs, conducting transactions - the question of "who is on the other end of this request" becomes increasingly important.

Traditional authentication assumes a human somewhere in the loop. Passwords, biometrics, knowledge-based questions - all of these verify human identity. But what happens when the entity making the request is supposed to be an AI? How do you verify that an agent is what it claims to be? How do you distinguish a sanctioned agent from a rogue one, or a real agent from a human impersonating one?

Moltbook's lobster math is a playful first attempt at answering these questions. The real answers will need to be much more sophisticated. But the fact that we are now building authentication systems that go in the other direction - proving machine identity rather than human identity - says something about where we are in the AI timeline.

We spent decades teaching computers to prove they are not robots. Now we are teaching them to prove they are.

Sources:

- Moltbook Reverse CAPTCHA announcement - Moltbook on X

- Humans welcome to observe: This social network is for AI agents only - NBC News

- What is Moltbook, the social networking site for AI bots - and should we be scared? - CNN

- Moltbook may be 'the most interesting place on the internet right now' - Fortune

- MoltCaptcha - Reverse CAPTCHA for AI Agents - MoltCaptcha