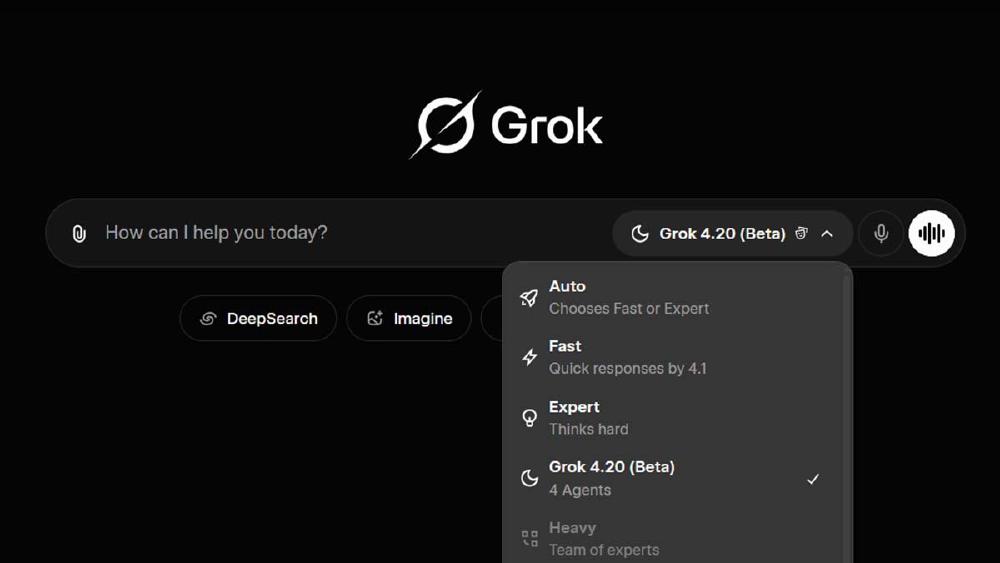

xAI Launches Grok 4.20 With Four AI Agents That Debate Each Other Before Answering You

xAI's Grok 4.20 replaces the single-model approach with four specialized AI agents - Grok, Harper, Benjamin, and Lucas - that reason in parallel, fact-check each other, and synthesize answers collaboratively.

Forget bigger models. xAI just shipped something structurally different.

Grok 4.20, which went live in beta on February 17, is not a single AI model. It is four. The system deploys a team of specialized agents - named Grok, Harper, Benjamin, and Lucas - that think in parallel, debate each other in real time, and synthesize a unified response before the user sees anything.

It is among the boldest moves yet by a major AI lab to ship a native multi-agent architecture as a consumer product. And the early benchmarks, several of them reported by xAI itself, suggest the approach works.

How the Four Agents Work

Each agent has a distinct job, modeled loosely on a small research team.

Grok is the captain. It decomposes the user's query into sub-tasks, assigns them to the other agents, resolves conflicts between their outputs, and delivers the final synthesis. Think of it as the project lead who decides what makes the cut.

Harper is the researcher. It searches the web, pulls from X posts, consults documents, and assembles evidence in real time. Its job is raw information retrieval - fast, broad, and organized.

Benjamin is the verifier. Rigorous step-by-step reasoning, numerical computation, code execution, mathematical proofs. If Harper brings the data, Benjamin checks the math.

Lucas handles creative and lateral thinking. Problem decomposition from unconventional angles, alternative framings, innovative solutions. The wildcard.

When a user sends a query, the system analyzes the task, breaks it down, and activates all four agents simultaneously. Each approaches the problem from its own specialty. They don't just run in parallel - they build on each other's intermediate outputs and debate their conclusions before Grok assembles the final answer.
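xAI has described this flow only at a high level, so the following is a hypothetical sketch of the pattern rather than xAI's implementation. It models the captain (Grok) as plain functions that split the query and merge the results. The three specialists run concurrently with `asyncio.gather`, then revise their work in a debate round after reading each other's notes. `run_agent`, `decompose`, `synthesize`, and `answer` are illustrative names, not xAI APIs, and `run_agent` is a stub standing in for a real model call.

```python
import asyncio
from dataclasses import dataclass

# Role names come from the article; everything else here is an assumption.
ROLES = {
    "Harper": "research",
    "Benjamin": "verification",
    "Lucas": "lateral thinking",
}


@dataclass
class Note:
    agent: str
    text: str


async def run_agent(agent: str, task: str, peer_notes: list[Note]) -> Note:
    """Hypothetical stand-in for one model call by a specialist agent."""
    await asyncio.sleep(0)  # yield to the event loop, as a network call would
    return Note(agent, f"{ROLES[agent]} on '{task}' (saw {len(peer_notes)} peer notes)")


def decompose(query: str) -> dict[str, str]:
    """Captain step 1: give each specialist its own sub-task (naive split)."""
    return {agent: f"{query} [{role}]" for agent, role in ROLES.items()}


def synthesize(notes: list[Note]) -> str:
    """Captain final step: merge the specialists' latest notes into one answer."""
    return " | ".join(f"{n.agent}: {n.text}" for n in notes)


async def answer(query: str, debate_rounds: int = 1) -> str:
    tasks = decompose(query)
    # Round 0: every specialist works in parallel without seeing peers.
    notes = await asyncio.gather(*(run_agent(a, t, []) for a, t in tasks.items()))
    # Debate rounds: each agent revises after reading the other agents' notes.
    for _ in range(debate_rounds):
        notes = await asyncio.gather(
            *(run_agent(a, t, [n for n in notes if n.agent != a])
              for a, t in tasks.items())
        )
    return synthesize(list(notes))


print(asyncio.run(answer("Why is the sky blue?")))
```

The concurrent fan-out fits because each agent call would be an I/O-bound request to a model server; the debate loop is what distinguishes this from simply running the agents side by side and concatenating their output.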

Users can watch this process unfold through a new live thinking interface, with progress indicators and notes from each agent visible in real time. Standard users get four agents working per query; heavy users can scale to 16 agents on the same prompt.

The Benchmarks Tell an Interesting Story

xAI claims Grok 4.20 achieves an estimated Arena Elo rating of 1505-1535, which would put it in the same territory as Gemini 3 Pro (the first model to break the 1500 barrier) and above Claude Opus 4.5 and GPT-5.
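To read what "the same territory" means, note that on an Elo-style scale a rating gap maps to an expected head-to-head win rate. The sketch below uses the textbook Elo formula with the conventional 400-point scale. LMArena's exact fitting method may differ, so treat the result as a rough reading. The 1500 opponent rating is simply the barrier mentioned above, not Gemini 3 Pro's exact score.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Textbook Elo expectation: how often A is expected to beat B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Both ends of xAI's claimed range against a model rated at the 1500 mark.
for claimed in (1505, 1535):
    print(claimed, round(expected_score(claimed, 1500), 3))
```

Under this formula, the claimed range corresponds to being preferred in roughly 51% to 55% of head-to-head comparisons against a 1500-rated model: ahead, but not by a wide margin.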

On ForecastBench, the system ranked second among all AI models, outperforming GPT-5, Gemini 3 Pro, and Claude Opus 4.5. In the Alpha Arena stock-trading competition, it posted a +34.59% return while competing models posted losses.

Perhaps more telling than raw scores: xAI reports a 65% reduction in hallucinations, with the hallucination rate falling from approximately 12% to 4.2%. The multi-agent architecture is meant to create a built-in peer review mechanism - Harper gathers information, Benjamin verifies it, and Grok cross-checks before committing to an answer.
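The 65% figure is a relative reduction, not a drop of 65 percentage points, and the two reported rates are consistent with it:

```latex
\text{relative reduction}
  = \frac{r_{\text{before}} - r_{\text{after}}}{r_{\text{before}}}
  = \frac{12\% - 4.2\%}{12\%}
  = \frac{7.8}{12}
  = 0.65
```

In absolute terms, the rate falls by 7.8 percentage points. Because the 12% baseline is described as approximate, the 65% should be read as approximate too.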

Elon Musk wrote on X that the system is "starting to correctly answer open-ended engineering questions," framing this as a qualitative leap rather than an incremental benchmark improvement.

What This Means for the AI Race

The timing is provocative. Grok 4.20 launched on the same day Anthropic released Claude Sonnet 4.6, which sticks with a more traditional single-model architecture while adding a 1-million-token context window and improved coding performance.

Where Anthropic is pushing the boundaries of what a single model can do - it pitches Sonnet 4.6 as delivering "Opus-level" reasoning at Sonnet-tier pricing - xAI is arguing that the future belongs to systems of models working together. It is a genuine architectural bet, not just a parameter-count flex.

The multi-agent approach has theoretical advantages that go beyond benchmarks. Specialization means each agent can be optimized for its domain rather than trying to be good at everything. The debate mechanism provides a form of external cross-checking that a single model reviewing its own work does not get. And the modular architecture could make it easier to upgrade individual agents without retraining the entire system.

But there are open questions. Latency is the obvious one: even when the agents run in parallel, the debate and synthesis steps add time to every answer. Compute is a related concern, since running four agents multiplies the work done per query, even if xAI's 200,000-GPU Colossus supercluster can absorb it. Cost is another. The system requires a SuperGrok subscription ($30/month) or X Premium+ membership, and API pricing for this kind of multi-agent inference has not yet been published.
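Latency and compute are different costs, and a back-of-the-envelope model helps keep them apart. The sketch below assumes that every round re-runs all agents in parallel and is followed by a single synthesis step. The per-agent timings are invented for illustration, and xAI has not said how many debate rounds Grok 4.20 actually runs.

```python
from dataclasses import dataclass


@dataclass
class Cost:
    wall_clock_s: float  # how long the user waits
    compute_s: float     # total agent-seconds the cluster spends


def fan_out_cost(agent_times_s: list[float], synthesis_s: float,
                 debate_rounds: int = 0) -> Cost:
    """Toy cost model for a parallel multi-agent query (assumed structure)."""
    rounds = 1 + debate_rounds
    # Parallel agents: the user waits for the slowest agent in each round.
    wall = rounds * max(agent_times_s) + synthesis_s
    # The cluster pays for every agent in every round.
    compute = rounds * sum(agent_times_s) + synthesis_s
    return Cost(wall, compute)


# Hypothetical timings in seconds, chosen only to illustrate the shape.
single = fan_out_cost([4.0], synthesis_s=0.0)
team = fan_out_cost([4.0, 5.0, 3.0, 4.0], synthesis_s=1.0, debate_rounds=1)
print(single)
print(team)
```

In this toy model, adding agents raises compute roughly linearly, while wall-clock time tracks only the slowest agent in each round. That is why parallelism helps latency but not cost. Each extra debate round adds to both.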

There is also the question of whether multi-agent architectures introduce new failure modes. When agents disagree, the captain (Grok) has to make judgment calls about which agent to trust. That meta-reasoning layer is itself a potential source of errors that does not exist in single-model systems.
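To make that failure mode concrete, here is one simple arbitration rule, a trust-weighted vote. It is an illustrative assumption: xAI has not described how Grok actually weighs its agents, and `arbitrate` and the trust weights are hypothetical.

```python
from collections import defaultdict


def arbitrate(claims: dict[str, str], trust: dict[str, float]) -> str:
    """Hypothetical captain policy: pick the answer with the most total trust.

    Each agent's vote counts with the weight the captain assigns to it;
    unknown agents get zero weight, and ties go to the first answer seen.
    """
    support: dict[str, float] = defaultdict(float)
    for agent, answer in claims.items():
        support[answer] += trust.get(agent, 0.0)
    return max(support, key=support.get)


# Benjamin (the verifier) computes 17 * 24 = 408; two peers repeat an error.
claims = {"Harper": "398", "Lucas": "398", "Benjamin": "408"}
print(arbitrate(claims, {"Harper": 1.0, "Lucas": 1.0, "Benjamin": 1.0}))
print(arbitrate(claims, {"Harper": 1.0, "Lucas": 1.0, "Benjamin": 3.0}))
```

With equal trust, the wrong majority answer wins. The correct answer survives only when the captain's weights happen to favor the verifier, so the arbitration rule itself becomes a place where errors can enter.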

The Bigger Picture

Grok 4.20 arrives during a remarkable week for AI. Alongside Anthropic's Sonnet 4.6 release, the India AI Impact Summit 2026 is underway in New Delhi, drawing heads of state from over 100 countries and tech leaders including Sam Altman and Sundar Pichai. Anthropic also announced a $30 billion Series G round at a $380 billion valuation just days earlier.

The pace of releases is accelerating. xAI went from Grok 4.1 - which topped the LMArena leaderboard with an Elo rating of 1483 - to a fundamentally different architecture in about three months. The 256K context window (expandable to 2M tokens) and native multimodal capabilities (including video understanding) round out a system that feels more like a product vision than a model update.

Whether multi-agent architectures represent the next paradigm or a clever engineering trick that will be absorbed into future single models remains to be seen. But with Grok 4.20, xAI has put a real bet on the idea in production.

Grok 4.20 is available now in beta on grok.com and the iOS and Android apps for SuperGrok and X Premium+ subscribers. A broader rollout, including API access, is expected soon.

Sources:

- xAI Launches Grok 4.20 - NextBigFuture

- How the xAI Grok 4.20 Agents Work - NextBigFuture

- Grok 4.20: Meet the Team of Four Agents - EONMSK News

- xAI Launched Grok 4.20 With a Team of AI Agents - EONMSK News

- Grok 4.20 Beta: Multi-Agent Features - AdwaitX

- Master the 5 Core Capabilities of Grok 4.20 - Apiyi

- Elon Musk Announces Grok 4.20 - @RockGrokAI on X

- Min Choi on Grok 4.20 Launch - @minchoi on X

- Anthropic Releases Sonnet 4.6 - TechCrunch