Apple Bets Big on AI Wearables With Smart Glasses, Pendant, and Camera AirPods

Apple is accelerating development of three AI wearable devices built around a Gemini-powered Siri, setting up a direct collision with Meta in a market projected to quadruple this year.

Apple is building three AI-powered wearable devices - smart glasses, a wearable pendant, and camera-equipped AirPods - in what amounts to the most aggressive hardware pivot the company has made since the Apple Watch launch a decade ago.

The details come from a Bloomberg report published on February 17, which describes prototypes already in the hands of Apple's hardware engineering teams and production timelines stretching into late 2026. All three devices are designed around an upgraded version of Siri, which Apple is rebuilding from the ground up using Google's Gemini AI models under a deal reportedly worth around $1 billion per year.

The strategic question is straightforward: can Apple catch Meta in an AI smart glasses market that analysts expect to grow from $1.2 billion in 2025 to $5.6 billion in 2026?

The Three Devices

Smart glasses are the flagship play. Apple's version will feature a dual-camera system - one high-resolution camera for photos and video, and a second camera that feeds visual context to Siri for real-time object recognition, navigation, and scene understanding. There will be no display in the lenses. Interaction is entirely voice-based.

Apple designed the frames in-house rather than partnering with an established eyewear brand, a deliberate departure from the approach Google is taking with its Warby Parker and Kering partnerships. The glasses will ship in multiple sizes and colors using premium materials including acrylic elements. Production is targeted for as early as December 2026, with a 2027 launch.

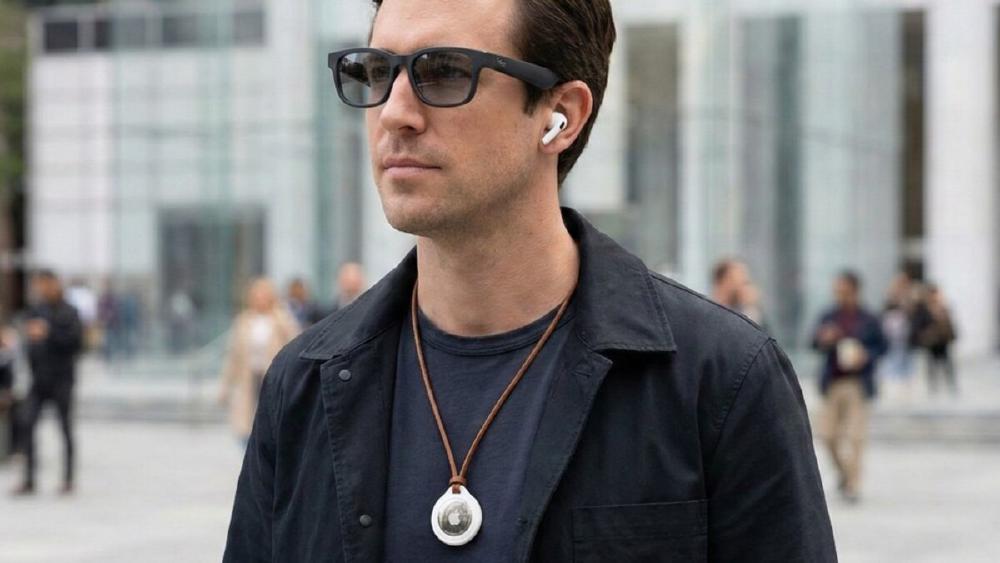

The AI pendant is more experimental. Bloomberg describes it as a thin, flat, circular disc with an aluminum-and-glass shell, two cameras, a speaker, and three microphones. It clips to clothing or hangs from a necklace. Some Apple employees internally call it the "eyes and ears" of the iPhone - an always-on camera and microphone that gives Siri persistent visual context about the wearer's environment.

The pendant is explicitly designed as an iPhone accessory, not a standalone device. Processing happens on the phone. Apple is targeting a possible 2027 launch, though development is early enough that the entire project could still be canceled.

Camera-equipped AirPods are the furthest along. The new AirPods would include a low-resolution camera meant to give the AI a view of the world, not to capture photos. The goal is ambient visual context for an assistant that can see what you see. They could ship as early as late 2026.

The Siri Problem

Every one of these devices depends on a Siri that does not yet exist.

In January, Apple announced a multiyear partnership with Google under which the next generation of Apple Foundation Models will be built on Gemini technology. The next-generation Siri, expected in iOS 27, will reportedly feature chatbot-like conversational abilities, processing both on the device and through Apple's Private Cloud Compute infrastructure, and the kind of contextual reasoning that makes a voice-first wearable actually useful.

The problem is timing. Apple's iOS 26.4 beta, released in recent weeks, still does not include major new Apple Intelligence features. The company has a documented pattern of hardware readiness outpacing software capability, as we discussed in our coverage of Apple's earlier GPT-5 integration plans. A Siri that cannot reliably handle complex queries would undermine the entire value proposition of smart glasses that have no display.

Google's Gemini models are genuinely strong at multimodal reasoning. The open question is whether Apple can fit that capability into its privacy-first architecture without sacrificing the performance that makes the underlying models competitive in the first place.

Following the Money

Apple is entering a market that Meta currently owns. Ray-Ban Meta smart glasses have sold over 2 million units, and Meta is scaling production capacity to 10 million units a year by the end of 2026. The glasses are already the top-selling product in 60% of Ray-Ban's EMEA stores.

Meta has a three-year head start, a proven retail partnership with Luxottica, and a willingness to subsidize hardware for ecosystem growth. Apple's counter-strategy appears to be the same one it has used in every hardware category since the iPhone: enter late, charge more, and win on integration.

The AI smart glasses market is projected to grow from 6 million units in 2025 to 20 million units in 2026, with revenue quadrupling to $5.6 billion, according to Smart Analytics Global. By 2030, the category could exceed $30 billion. Apple, Samsung, and Meta are expected to emerge as the top three vendors.

Google is also preparing to enter the space, developing smart glasses in partnership with Warby Parker and luxury group Kering, with a first product expected in 2026. Samsung has its own efforts underway. The race is getting crowded fast.

The Humane Question

The AI pendant will inevitably draw comparisons to Humane's AI Pin. Humane raised $230 million in venture capital, then launched the device to brutal reviews and collapsing sales. The company tried to sell the AI Pin as a smartphone replacement. It could not deliver.

Apple is taking the opposite approach. The pendant is designed as an accessory, not a replacement. It relies entirely on the iPhone for processing. There is no screen, no projector, no pretension of standalone utility. It exists to give Siri eyes, nothing more.

That is a smarter bet than Humane made. But it also raises a question: if the pendant is merely an iPhone peripheral, why not simply hold up the phone? The answer has to be convenience: persistent, ambient AI that works without a conscious decision to invoke it. Whether consumers will pay for that convenience, and whether an always-on camera worn around the neck will draw regulatory scrutiny over privacy, remains to be seen.

The UK this week ordered platforms to remove nonconsensual AI-generated intimate images within 48 hours. Ireland's Data Protection Commission opened a formal investigation into X's Grok chatbot over deepfake imagery. Neither action targets wearable cameras directly, but together they show regulators tightening their grip on AI-driven imagery, and an always-on camera worn in public sits squarely in that territory.

What This Means for Apple's AI Strategy

The wearable push is the clearest signal yet that Apple views AI not as a software feature to be bolted onto existing products, but as a hardware category of its own. The company is spending roughly $1 billion per year on Google's AI models, designing its own silicon for on-device inference, and now building three entirely new product lines around the idea that AI needs to see the world to be genuinely useful.

If Siri delivers, Apple's ecosystem advantages - privacy controls, hardware-software integration, a billion active iPhones as processing backends - could make its wearables the default choice for consumers already locked into the Apple ecosystem. That is a massive addressable market.

If Siri does not deliver, Apple will be selling expensive glasses and a pendant that cannot do what the marketing promises. The company has been in that position before with Apple Intelligence. It cannot afford to be there again.

The prototypes exist. The partnerships are signed. The market is growing. Now Apple has to ship software that justifies the hardware - and do it before Meta's head start becomes insurmountable.

Sources:

- Apple Ramps Up Work on Glasses, Pendant and Camera AirPods for AI Era - Bloomberg

- Apple Working on Three AI Wearables: Smart Glasses, AI Pin, and AirPods With Cameras - MacRumors

- Apple accelerating work on three new AI wearables, per report - 9to5Mac

- AI Smart Glasses to Quadruple Revenue in 2026 - BusinessWire

- Apple picks Google's Gemini to run AI-powered Siri - CNBC

- Joint statement from Google and Apple