Do AI Benchmarks Still Matter? The Evidence for and Against Public Leaderboards

A data-driven look at benchmark contamination, leaderboard gaming, and whether public AI benchmarks can still tell us anything useful about model capabilities.

Every few weeks, a new model drops with a blog post full of benchmark scores showing it beats everything that came before. MMLU: up. HumanEval: up. GSM8K: up. The chart goes up and to the right.

But a growing body of research - and some spectacular public scandals - suggests those numbers may be less meaningful than we think. The question is not whether benchmark contamination exists. It does. The question is whether it is bad enough to make public benchmarks useless, or whether they still carry signal through the noise.

I spent two weeks digging through every major paper, incident, and dataset on this topic. Here is what the evidence actually says.

The Case Against: Contamination Is Everywhere

The Numbers Are Damning

The most direct evidence comes from researchers who build fresh versions of existing benchmarks and watch the scores drop.

GSM8K vs. GSM1k. Scale AI researchers created GSM1k - 1,000 new grade-school math problems matching GSM8K's difficulty and style. Several model families showed accuracy drops of up to 8% on the fresh problems, with Mistral and Phi models showing "systematic overfitting across nearly all model versions and sizes." The correlation between a model's ability to reproduce GSM8K examples verbatim and its score gap was statistically significant (Spearman's r² = 0.36). The paper was accepted at NeurIPS 2024.
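The statistic in that last result is a rank correlation: sort models by how readily they reproduce GSM8K examples, sort them again by their GSM8K-minus-GSM1k gap, and measure how well the two orderings agree. Below is a minimal sketch of Spearman's rank correlation in plain Python. The five models and their numbers are made up purely for illustration, and this is not the paper's own analysis code.

```python
def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman's rho is Pearson's r computed on the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical toy data for five models (NOT from the GSM1k paper):
memorization = [0.1, 0.4, 0.2, 0.8, 0.6]  # how readily each model reproduces GSM8K items
score_gap = [0.5, 1.5, 2.0, 7.0, 3.0]     # GSM8K accuracy minus GSM1k accuracy, in points
rho = spearman(memorization, score_gap)
print(f"rho = {rho:.2f}, rho^2 = {rho ** 2:.2f}")
```

With these toy numbers the script prints `rho = 0.90, rho^2 = 0.81`. For comparison, an r² of 0.36 corresponds to a correlation of 0.6: a clear relationship, but far from a perfect one.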

MMLU vs. MMLU-CF. Microsoft researchers built MMLU-CF, a contamination-free reconstruction of MMLU with rephrased questions, shuffled options, and a closed-source test set. GPT-4o scored 73.4% on MMLU-CF versus 88.0% on the original MMLU - a drop of nearly 15 percentage points. That is not noise. That is memorization.

The Slot-Guessing Test. In a NAACL 2024 paper, researchers devised a clever contamination detector: mask a wrong answer option in a multiple-choice question and ask the model to fill it in. A model that has never seen the test should not be able to reproduce the exact distractor. ChatGPT matched the missing MMLU option 52% of the time, and GPT-4 matched it 57% of the time. For context, random chance would be close to 0%.
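The protocol is simple enough to sketch. The version below hides one randomly chosen wrong option, asks the model to restore it, and counts a hit only on a normalized exact match. `ask_model` is a hypothetical stand-in for whatever API call you use, the prompt wording is my own assumption, and the NAACL paper's exact prompts and matching rules may differ.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class MCQuestion:
    question: str        # assumed to be a single line of text
    options: list[str]   # answer choices in their original order
    answer_index: int    # index of the correct choice


def build_slot_guess_prompt(q: MCQuestion, rng: random.Random) -> tuple[str, str]:
    """Mask one incorrect option; return (prompt, hidden option text)."""
    wrong = [i for i in range(len(q.options)) if i != q.answer_index]
    masked = rng.choice(wrong)
    lines = [q.question]
    for i, option in enumerate(q.options):
        letter = chr(ord("A") + i)
        lines.append(f"{letter}. {'[MASK]' if i == masked else option}")
    lines.append("Fill in the [MASK] option exactly. Reply with the option text only.")
    return "\n".join(lines), q.options[masked]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace before comparing."""
    return " ".join(text.lower().split())


def exact_match_rate(questions: list[MCQuestion],
                     ask_model: Callable[[str], str], seed: int = 0) -> float:
    """Fraction of questions where the model reproduces the hidden distractor."""
    rng = random.Random(seed)
    hits = 0
    for q in questions:
        prompt, hidden = build_slot_guess_prompt(q, rng)
        if normalize(ask_model(prompt)) == normalize(hidden):
            hits += 1
    return hits / len(questions)
```

A model with no exposure to the test should score near zero on this probe, which is why match rates in the 50% range are so hard to explain away.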

SWE-bench's Leaky Pipes. The popular coding benchmark SWE-bench has its own problems. Analysis by Aleithan et al. found that 60.83% of successfully resolved issues involved "solution leakage" - the fix was either directly stated or strongly hinted at in the issue report itself. When those leaky issues were filtered out, agent resolution rates dropped from 42.1% to 21.8% on SWE-bench Lite. A separate study ("The SWE-Bench Illusion") showed that, given only the issue description, models could identify the buggy file path with up to 76% accuracy on SWE-bench Verified but at most 53% on repositories outside the benchmark, suggesting memorization of the dataset's structure rather than genuine debugging skill.
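The effect of filtering is easy to reproduce on your own agent logs once each task carries a leakage label. In the sketch below, `solution_leaked` is a hypothetical per-task flag that would have to come from reviewing each issue and patch, and leaky tasks are simply dropped from the pool before the rate is recomputed. That accounting choice is an assumption; the paper's exact method may differ.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    issue_id: str
    resolved: bool          # did the agent's patch pass the tests?
    solution_leaked: bool   # hypothetical label: fix stated or hinted in the issue


def rate(results: list[TaskResult]) -> float:
    return sum(r.resolved for r in results) / len(results) if results else 0.0


def leakage_report(results: list[TaskResult]) -> dict[str, float]:
    resolved = [r for r in results if r.resolved]
    clean = [r for r in results if not r.solution_leaked]
    return {
        "raw_rate": rate(results),
        # Share of the agent's "wins" that came from leaky issues.
        "leaked_share_of_resolved": (
            sum(r.solution_leaked for r in resolved) / len(resolved) if resolved else 0.0
        ),
        # Resolution rate after dropping leaky issues from the pool.
        "filtered_rate": rate(clean),
    }
```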

It Gets Worse: "Soft" Contamination

The most alarming recent finding comes from a February 2026 paper analyzing the OLMo 3 training corpus. Spiesberger et al. found that 78% of CodeForces problems and 50% of ZebraLogic problems had semantic duplicates in the training data - not exact copies, but paraphrased versions close enough to provide a learning shortcut.

The killer finding: fine-tuning on semantic duplicates produced the same performance boost as fine-tuning on exact duplicates (roughly 20% improvement). The authors concluded that "recent capability gains are confounded by this soft contamination." In other words, even if a lab carefully removes exact copies of benchmark questions from its training data, the web is full of blog posts, forum discussions, and tutorials that walk through the same problems in slightly different words. The model still effectively "sees" the test.
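Why does removing exact copies not help? Typical decontamination filters match text, not meaning. The sketch below contrasts a normalized exact-match check with a word 8-gram overlap check (n-gram overlap is a common decontamination heuristic; n = 8 is an arbitrary choice here). The example problem is invented, and this is not the detection method Spiesberger et al. used.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercase word tokens with punctuation stripped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ngrams(toks: list[str], n: int) -> set[tuple[str, ...]]:
    return {tuple(toks[i:i + n]) for i in range(len(toks) - n + 1)}


def exact_duplicate(doc: str, item: str) -> bool:
    return tokens(doc) == tokens(item)


def ngram_overlap(doc: str, item: str, n: int = 8) -> bool:
    return bool(ngrams(tokens(doc), n) & ngrams(tokens(item), n))


item = "A baker makes 24 muffins and sells 3 boxes of 6. How many muffins are left?"
candidates = {
    "verbatim copy": item,
    "forum post": "Homework help: " + item + " Thanks!",
    "paraphrase": "If a bakery bakes two dozen muffins, then sells three six-muffin boxes, how many remain?",
}
for name, doc in candidates.items():
    print(f"{name}: exact={exact_duplicate(doc, item)}, 8-gram={ngram_overlap(doc, item)}")
```

Both checks catch the verbatim copy, only the n-gram check catches the problem pasted into a forum post, and neither catches the paraphrase, which is exactly the kind of soft duplicate the paper describes.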

ICML 2025: No Mitigation Strategy Works

If contamination is the disease, what about the cure? An ICML 2025 paper titled "The Emperor's New Clothes in Benchmarking?" tested 20 different contamination mitigation strategies across 10 models and 5 benchmarks. The verdict: none of them work well. Strategies that preserve the original question's meaning ("semantic-preserving") do not actually improve contamination resistance. Strategies that change the meaning ("semantic-altering") resist contamination but lose fidelity - they end up measuring something different from the original benchmark.
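To make "semantic-preserving" concrete, here is one such transformation: shuffling a question's answer options and remapping the correct answer. Option shuffling is used only as an illustration; I am not claiming it matches any specific strategy on the ICML paper's list. A model that memorized the answer letter is thrown off, but one that memorized the answer text is not, which is one plausible reason rewrites like this add little contamination resistance.

```python
import random


def shuffle_options(options: list[str], answer_index: int, seed: int) -> tuple[list[str], int]:
    """Reorder the options (same meaning) and return the new correct index."""
    rng = random.Random(seed)
    order = list(range(len(options)))
    rng.shuffle(order)
    new_options = [options[i] for i in order]
    # The correct option now sits wherever its original index landed in `order`.
    new_answer = order.index(answer_index)
    return new_options, new_answer


options = ["3", "4", "5", "22"]
new_options, new_answer = shuffle_options(options, answer_index=1, seed=7)
print(new_options, "correct:", "ABCD"[new_answer])
```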

The Llama 4 Scandal

Theory is one thing. A major lab getting publicly caught is another.

In April 2025, Meta released Llama 4 Maverick and submitted an "experimental" version to Chatbot Arena that ranked #2 overall. The community quickly noticed the experimental version produced verbose, emoji-heavy outputs tuned for human raters, while the public release was concise. When the actual public model was independently evaluated, it ranked 32nd.

But the real bombshell came in January 2026, when departing Meta chief AI scientist Yann LeCun confirmed in a Financial Times interview that the team "fudged a little bit" - using different model variants for different benchmarks "to give better results." Normally, a single model version is tested across all benchmarks.

LeCun added: "Mark [Zuckerberg] was really upset and basically lost confidence in everyone who was involved in this. And so basically sidelined the entire GenAI organization."

The Leaderboard Illusion

The Llama 4 scandal also exposed deeper problems with Chatbot Arena itself. A 68-page audit by researchers from Cohere Labs, the Allen Institute for AI, Princeton, and Stanford ("The Leaderboard Illusion") found that Meta privately tested 27 model variants in March 2025 before revealing only the best-performing one. Google and OpenAI received 19.2% and 20.4% of all arena data respectively, while 83 open-weight models combined received only 29.7%. Sara Hooker, Cohere's VP of AI research, noted that "only a handful of [companies] were told that private testing was available."
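Private testing inflates scores even if no single variant is gamed, because publishing the best of many noisy measurements is itself a bias. The simulation below assumes, purely for illustration, that every variant has exactly the same true score and that each measurement adds independent Gaussian noise; the Elo-style scale and noise level are arbitrary.

```python
import random
import statistics


def best_of_n_inflation(true_score: float, noise_sd: float, n_variants: int,
                        trials: int = 2000, seed: int = 0) -> float:
    """Average amount by which the best of n noisy measurements exceeds the truth."""
    rng = random.Random(seed)
    best_scores = []
    for _ in range(trials):
        measured = [rng.gauss(true_score, noise_sd) for _ in range(n_variants)]
        best_scores.append(max(measured))
    return statistics.mean(best_scores) - true_score


# Hypothetical setup: identical variants, noise SD of 10 points.
for n in (1, 5, 27):
    print(n, round(best_of_n_inflation(1200.0, 10.0, n), 1))
```

With one variant the inflation is about zero; with 27 it is roughly two noise standard deviations, since that is about where the maximum of 27 independent normal draws tends to fall.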

Karpathy's Verdict

Andrej Karpathy, in his widely read 2025 LLM Year in Review, said he had "completely lost interest and trust" in benchmarks. His diagnosis: labs construct training environments adjacent to benchmark distributions, grow capability spikes to cover them, and produce models that "crush leaderboards while remaining unreliable in the wild." He called it a new art form of "training on the test set" and asked the central question: "What does it look like to crush all the benchmarks but still not get AGI?"

The Case For: Benchmarks Still Carry Signal

Frontier Models Show Less Contamination

Here is the nuance the doomers miss: the GSM1k study that found drops of up to 8% in some model families also found that frontier models (GPT-4, Claude) showed minimal overfitting. The paper explicitly states that "all models broadly demonstrate generalization to novel math problems guaranteed to not be in their training data."

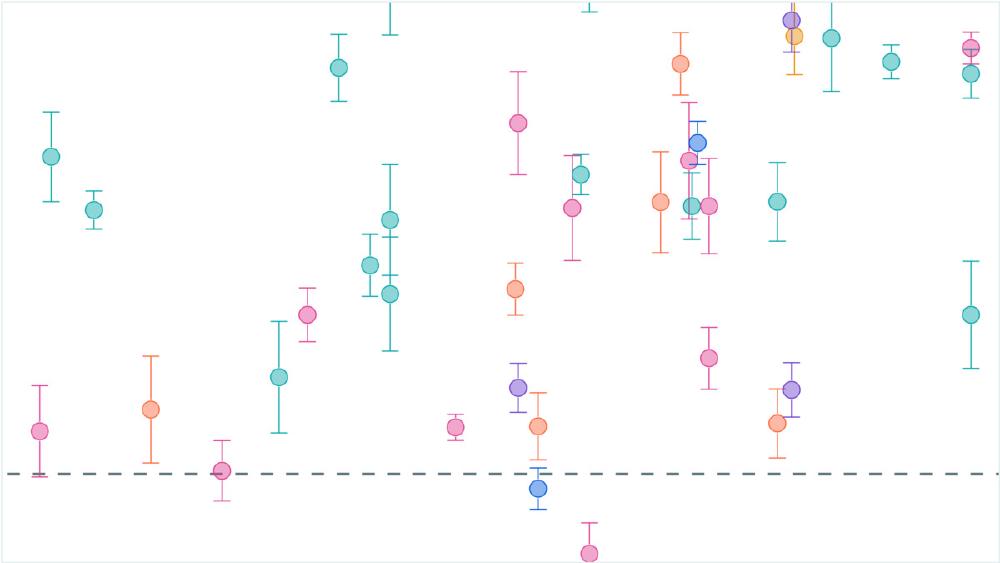

This suggests a pattern: contamination is worst among mid-tier open-source models that may be over-trained on limited data, while the largest, most capable models have enough general knowledge that benchmark-specific memorization is a smaller factor in their scores. The signal is noisy, but it is not zero.

Human Preference Rankings Correlate

Chatbot Arena, despite its flaws, has collected over 6 million user votes across more than 400 models. The original paper showed that "crowdsourced human votes are in good agreement with those of expert raters." For all the gaming that goes on behind the scenes, the broad strokes of the rankings - that GPT-4-class models outperform GPT-3.5-class models, that frontier models beat mid-tier ones - have held up when checked against expert judgments.

The rankings are not wrong about which tier a model belongs in. They are wrong about the precise ordering within a tier, and they are gameable at the margins. That is still useful information.

The New Generation of Contamination-Resistant Benchmarks

The field is not standing still. Several approaches are actively working to solve the contamination problem:

LiveBench (ICLR 2025 Spotlight) updates its questions monthly, drawing from recent math competitions, new arXiv papers, and current news articles. A model whose training data was collected before a given month's release cannot have seen those questions, so for that model, contamination of that month's test set is structurally impossible. Top models score below 70%, far from saturation.
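The guarantee boils down to a date comparison, which the sketch below makes explicit. `Question.released` and the `training_cutoff` argument are hypothetical fields, and the argument only holds if a model's reported training cutoff is accurate.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Question:
    qid: str
    released: date  # when the question (or its source material) first became public


def unseen_questions(questions: list[Question], training_cutoff: date) -> list[Question]:
    """Keep only questions released after the model's training data was collected."""
    return [q for q in questions if q.released > training_cutoff]


pool = [
    Question("math-01", date(2024, 5, 1)),
    Question("math-02", date(2024, 7, 15)),
    Question("news-01", date(2024, 6, 30)),
]
print([q.qid for q in unseen_questions(pool, training_cutoff=date(2024, 6, 30))])
```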

SEAL Leaderboards by Scale AI use private, unpublished datasets evaluated by verified domain experts. The test questions never touch the public internet, which closes off the web-scraping route to contamination entirely.

Humanity's Last Exam, created by the Center for AI Safety and Scale AI and published in Nature, contains 2,500 expert-crafted questions designed to be "Google-proof." As of early 2025, the best models scored around 35-37%. By December 2025, a multi-model system reached 52%. Because HLE is also far harder by design, the gap between benchmark-saturated tests (90%+) and genuinely hard evaluation (~35-52%) is not a clean measure of contamination. It does show how little a 90%+ score on a saturated test tells you about a model's real ceiling.

ARC-AGI-2 takes the most radical approach: pure LLMs score 0% on it, yet every task has been solved by at least two human testers. It is designed to measure genuine novel reasoning, not pattern matching or memorization.

Relative Rankings Still Hold

Even contaminated benchmarks are not completely useless. If every model is contaminated to roughly the same degree (which is plausible when they all train on Common Crawl), the relative ordering may still be informative. A model that scores 90% on a contaminated MMLU and 75% on a clean MMLU-CF is probably still better than one scoring 80% and 65% respectively. The absolute numbers are inflated, but the ranking signal persists.
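One way to test the "relative ordering survives" argument is to count how many model pairs the contaminated and clean benchmarks order the same way. The sketch reuses the 90/75 versus 80/65 example above and adds a made-up Model C whose inflation is larger than the others'. Ties count as disagreement here, a simplifying choice.

```python
from itertools import combinations


def pairwise_agreement(contaminated: dict[str, float], clean: dict[str, float]) -> float:
    """Fraction of model pairs that both benchmarks rank in the same order."""
    pairs = list(combinations(sorted(contaminated), 2))
    agree = sum(
        (contaminated[a] - contaminated[b]) * (clean[a] - clean[b]) > 0
        for a, b in pairs
    )
    return agree / len(pairs)


# Uniform inflation (15 points for both models) preserves the ordering.
print(pairwise_agreement({"A": 90, "B": 80}, {"A": 75, "B": 65}))  # 1.0
# Hypothetical Model C is inflated by 25 points and flips its pair with B.
print(pairwise_agreement({"A": 90, "B": 80, "C": 85}, {"A": 75, "B": 65, "C": 60}))
```

With no ties, Kendall's tau equals 2 × agreement − 1, so the second case (two of three pairs agreeing) corresponds to a tau of about 0.33.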

The Verdict: Broken but Not Useless

Here is my read on where things actually stand.

Static public benchmarks are mostly dead for absolute measurement. If you are using an MMLU or HumanEval score to decide whether a model is "smart enough" for your use case, you are fooling yourself. Those numbers can be inflated by 10-20 percentage points, and the degree of inflation varies unpredictably between model families.

Relative rankings within a benchmark generation still carry signal. If Model A beats Model B on five different benchmarks by a consistent margin, Model A is probably better. The absolute numbers are wrong, but the ordering is informative - as long as one model is not selectively gaming the specific benchmarks being compared.

The new generation of dynamic and private benchmarks is the real answer. LiveBench, SEAL, Humanity's Last Exam, and ARC-AGI-2 are doing what static benchmarks cannot: presenting models with problems they have genuinely never seen. The field is moving in the right direction.

Human preference evaluation is complementary, not a replacement. Chatbot Arena is valuable but gameable. It measures "which output do humans prefer in a blind test," not "which model is more capable." Those are different questions, and conflating them has caused real problems.

The most important evaluation is your own. Run the model on your actual tasks. The benchmark that matters most is the one you build yourself, on your own data, measuring the specific capabilities you care about. No public leaderboard can tell you whether a model works for your use case as reliably as testing it yourself.
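A personal benchmark does not need much infrastructure. The sketch below assumes a hypothetical `model` callable that wraps whatever API or local runtime you use, plus a pass/fail check you write for each case. Rerunning the same cases against a second model gives you a head-to-head comparison on your own data.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str
    check: Callable[[str], bool]  # your task-specific pass/fail rule


def run_eval(model: Callable[[str], str], cases: list[Case]) -> tuple[float, list[int]]:
    """Return the pass rate and the indices of failing cases for later inspection."""
    failures = [i for i, case in enumerate(cases) if not case.check(model(case.prompt))]
    return 1 - len(failures) / len(cases), failures


# Toy stand-in for a real model call, for demonstration only.
def fake_model(prompt: str) -> str:
    return prompt.upper()


cases = [
    Case("extract the invoice id: inv-2041", lambda out: "INV-2041" in out),
    Case("reply with json", lambda out: out.strip().startswith("{")),
]
print(run_eval(fake_model, cases))  # (0.5, [1])
```

Keeping the failing indices, not just the score, is a deliberate choice: on a small private test set, reading the failures usually teaches you more than the aggregate number.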

The era of trusting a single leaderboard score is over. But the era of measurement is not. We just need better instruments.

Sources:

- A Careful Examination of Large Language Model Performance on Grade School Arithmetic (GSM1k) - NeurIPS 2024

- MMLU-CF: A Contamination-free Multi-task Language Understanding Benchmark - ACL 2025

- Soft Contamination Means Benchmarks Test Shallow Generalization - Spiesberger et al., February 2026

- Investigating Data Contamination in Modern Benchmarks for Large Language Models - NAACL 2024

- The SWE-Bench Illusion: When State-of-the-Art LLMs Remember Instead of Reason

- SWE-Bench+: Enhanced Coding Benchmark for LLMs

- The Emperor's New Clothes in Benchmarking? - ICML 2025

- The Leaderboard Illusion - Singh et al., 2025

- Yann LeCun: Meta 'fudged a little bit' when benchmark-testing Llama 4 model - Fast Company

- Meta exec denies the company artificially boosted Llama 4's benchmark scores - TechCrunch

- LiveBench: A Challenging, Contamination-Free LLM Benchmark - ICLR 2025

- SEAL Leaderboards - Scale AI

- Humanity's Last Exam - Nature

- Chatbot Arena: An Open Platform for Evaluating LLMs by Human Preference

- When Benchmarks Leak: Inference-Time Decontamination for LLMs

- Epoch AI Benchmarks Hub