Best AI Code Review Tools in 2026: 6 Options Tested and Compared

A data-driven comparison of the top AI code review tools in 2026, including CodeRabbit, Qodo, Greptile, DeepSource, Sourcery, and GitHub Copilot code review.

AI Benchmarks & Tools Analyst

James is a software engineer turned tech writer who spent six years building backend systems at a fintech startup in Chicago before pivoting to full-time analysis of AI tools and infrastructure. His engineering background means he doesn't just read the spec sheet: he runs the benchmarks, profiles the latency, and checks whether the marketing claims hold up under real workloads.

He studied Computer Science at the University of Illinois at Urbana-Champaign, where he first got hooked on natural language processing during a senior research project on sentiment analysis. He later completed a certificate in data journalism from Northwestern's Medill School.

At Awesome Agents, James owns the leaderboards and tool comparison coverage. He maintains the site's benchmark tracking methodology and is the person who actually runs the numbers before publishing any ranking. He is also an open-source advocate and contributes to several projects in the LLM inference space.

Based in Chicago, IL.

A data-driven look at benchmark contamination, leaderboard gaming, and whether public AI benchmarks can still tell us anything useful about model capabilities.

Rankings of the best open source LLMs you can run on home hardware - RTX 4090, RTX 3090, Apple M3/M4 Max - organized by VRAM tier with real-world token/s benchmarks and quality scores.

Rankings of the best AI models for long-context tasks, measuring retrieval accuracy, reasoning, and comprehension across massive context windows from 128K to 10M tokens.

A comprehensive comparison of 20+ free AI inference providers - from Google AI Studio and Groq to OpenRouter and Cerebras. Rate limits, model access, quotas, and how to get started.

Comprehensive ranking of the top large language models in February 2026, combining multiple benchmarks including reasoning, coding, knowledge, and multimodal capabilities.

Compare the best AI coding assistants of 2026 including GitHub Copilot, Cursor, Claude Code, Aider, Gemini CLI, and OpenAI Codex. Pricing, features, and recommendations.

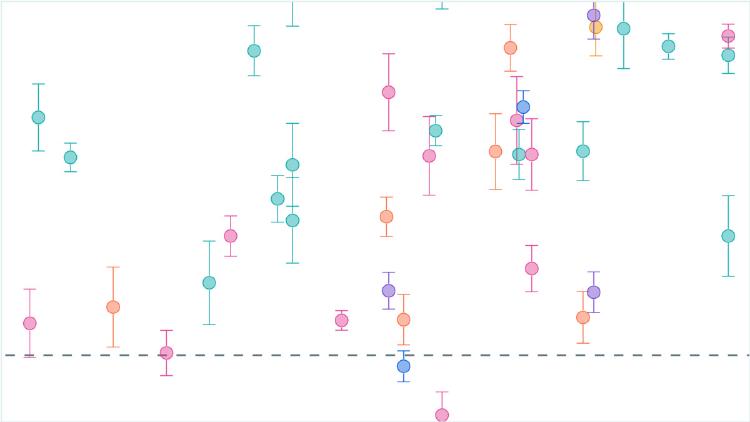

Explore the latest Chatbot Arena Elo rankings from LM Arena, where over 6 million human votes determine which AI models people actually prefer in blind comparisons.

Rankings of the best AI models for coding tasks across SWE-Bench, Terminal-Bench, and LiveCodeBench benchmarks, measuring real-world software engineering and algorithmic problem-solving ability.

Compare the top AI agent frameworks of 2026: LangChain, LangGraph, CrewAI, AutoGen, Semantic Kernel, OpenAI Agents, and LlamaIndex. Includes framework selection guide.

Rankings of AI models on the hardest reasoning benchmarks available: GPQA Diamond, AIME competition math, and the notoriously difficult Humanity's Last Exam.

Rankings of the best multimodal AI models for image understanding, video analysis, and visual reasoning, covering MMMU-Pro, Video-MMMU, and more.

Rankings of the best open-weight and open-source large language models in February 2026, including DeepSeek V3.2, Qwen 3.5, Llama 4 Maverick, GLM-5, and Mistral 3.

A thorough review of Cursor, the VS Code fork that has become the gold standard for AI-assisted coding with Composer mode, full project understanding, and multi-file edits.

Complete MMLU-Pro benchmark rankings measuring graduate-level knowledge across 14 subjects with 12,000 questions and 10 answer options per question.

Compare the best tools for running large language models locally: Ollama, LM Studio, llama.cpp, GPT4All, and LocalAI. Includes hardware requirements and model recommendations.

A thorough review of DeepSeek V3.2, the 671B parameter MoE model that delivers frontier-level performance at dramatically lower cost with an MIT license.

A hands-on review of Anthropic's Claude Code CLI, a terminal-first AI coding assistant that excels at large refactors, architecture work, and complex multi-file projects.

A practical tutorial on running open-source language models locally using Ollama, llama.cpp, and LM Studio, with hardware requirements and model recommendations.

Compare the best AI-powered search engines of 2026: Perplexity AI, Google AI Overviews, Bing Copilot, You.com, Phind, and Kagi. How AI search differs from traditional search.

Overview of the best AI video generators in 2026: Sora, Veo, Runway Gen-3, Pika, and Kling. Current capabilities, limitations, pricing, and practical use cases.

Rankings of AI models on competition mathematics benchmarks including AIME, IMO, HMMT, and MATH-500, measuring the cutting edge of mathematical reasoning.

A beginner-friendly guide to building your first AI agent with Python, covering core concepts like LLMs, tools, and memory, with a practical example using LangChain.

Everything you need to know about the Model Context Protocol (MCP): what it does, why it matters, which frameworks support it, and how to use it with real-world examples.